From the earliest days of the human race, nature has appeared to be shrouded in mystery. In those days, however, the shroud of mystery was, for the most part, a result of our ignorance. Without knowledge, everything seemed like magic, and nothing seemed to have a reason. It was the wit of the great Greek philosopher Aristotle that revealed the secret behind the magic trick. He is credited with pioneering the formal study of “logic.” He showed that there is a reason why something looks the way it does, acts the way it does, and why it is there. He proposed combining pieces of evidence (premises) to arrive at new conclusions, a form of reasoning known as the syllogism. It is hard for us even to imagine what Aristotle’s idea must have meant to the people of his time: it lifted the mystic fog off the everyday things they saw around them and gave them a mind as sharp as a knife. The impressive amount of scientific knowledge we have accumulated since then, in a span that is short compared to the whole of our existence, is, as they say, history, and a remarkable one. Aristotle’s simple concept changed the face of humanity and, as I like to believe, truly promoted us to the category of intelligent species in the Universe, as far as scientific development is concerned. Building upon this concept, scientists in a wide range of disciplines, physicists among them, made monumental advances in understanding Nature up to the end of the 19th century.

At the start of the 20th century, the traditional understanding of Physics, which historians and physicists like to call deterministic physics, faced enormous challenges as we, as a species, started asking fundamental questions about the nature of the subatomic world. It became clear, beyond doubt, that the subatomic world could not be described with deterministic Newtonian Physics. This led to the birth of Quantum Mechanics: a set of mathematical tools and concepts that can model the behavior of the subatomic world of atoms, electrons, and fundamental particles. I will not dare say that it explains the subatomic world, because a complete conceptual understanding of the quantum world is still far from us. All we can do is calculate the outcomes of controlled experiments, compare them with theoretical predictions, and claim victory because they agree well (extremely well!). While that might sound superficial to some people, it suffices as far as progress is concerned; to convince oneself, one only needs to look at the marvels of electronics in today’s world. A fundamental tenet of Quantum Mechanics is Heisenberg’s Uncertainty Principle. It states that it is impossible to know all the information about a subatomic particle precisely. For example, you can know its position accurately but its momentum only poorly, or its momentum accurately but its position only poorly. The principle does not apply only to these two “observables”; it extends to other pairs of observables as well. Such pairs are called non-commuting quantum mechanical observables (operators, to be technically correct, but you can ignore the difference). With the Uncertainty Principle, there is a fundamental barrier (a curtain, as I like to think of it) barring us from obtaining absolute knowledge of the nature of particles on subatomic scales.
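For readers who want the quantitative statement, here is the standard textbook form (not part of the original discussion, added only as a reminder). The first relation is for position and momentum; the second is the general version (the Robertson relation) for any two observables \(\hat{A}\) and \(\hat{B}\), where \(\Delta\) and \(\sigma\) are standard deviations over repeated measurements on identically prepared particles and \(\hbar\) is the reduced Planck constant. Plugging the commutator on the right into the general relation gives back the first one.

```latex
\Delta x \, \Delta p \;\geq\; \frac{\hbar}{2},
\qquad
\sigma_A \, \sigma_B \;\geq\; \frac{1}{2}\left| \langle [\hat{A}, \hat{B}] \rangle \right|,
\qquad
[\hat{x}, \hat{p}] = i\hbar .
```

A nonzero commutator \([\hat{A}, \hat{B}]\) is exactly what “non-commuting” means, and it is what puts a floor under the product of the two uncertainties.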

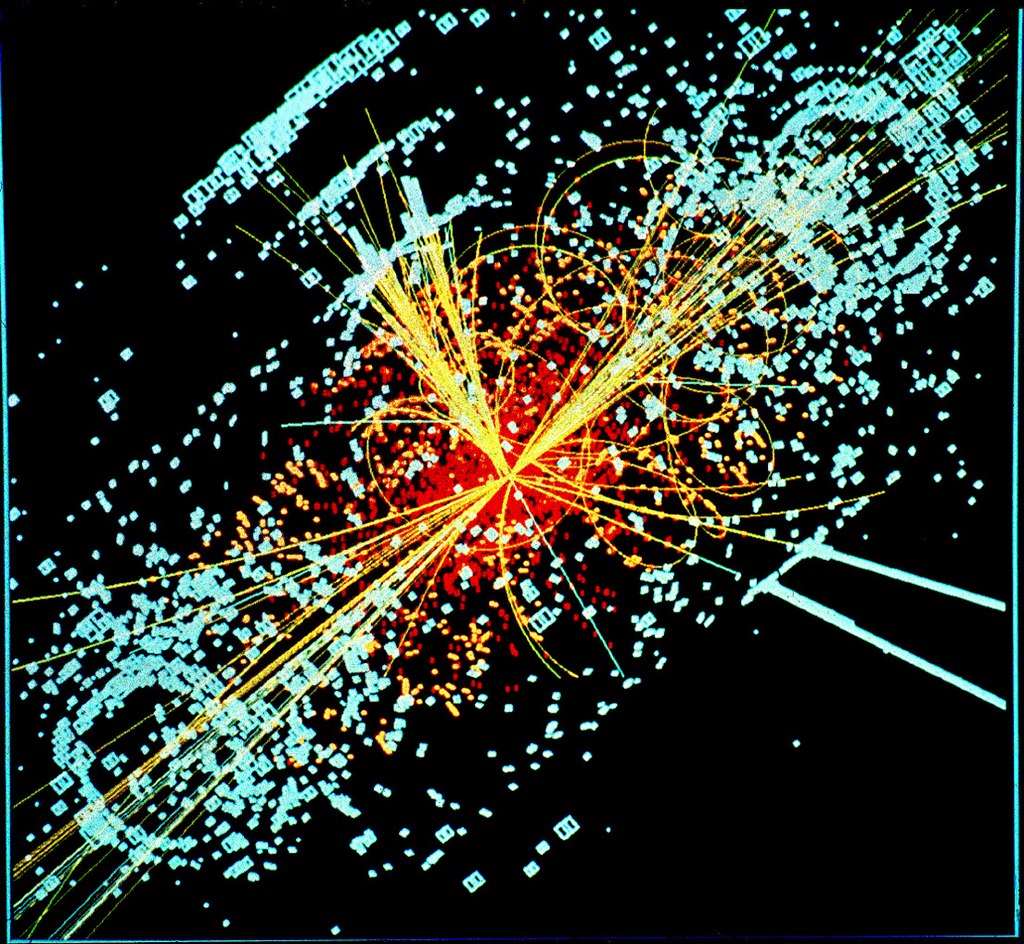

A simulated image of a particle collision in the CMS particle detector at the LHC. Produced by CERN, shared under Creative Commons license

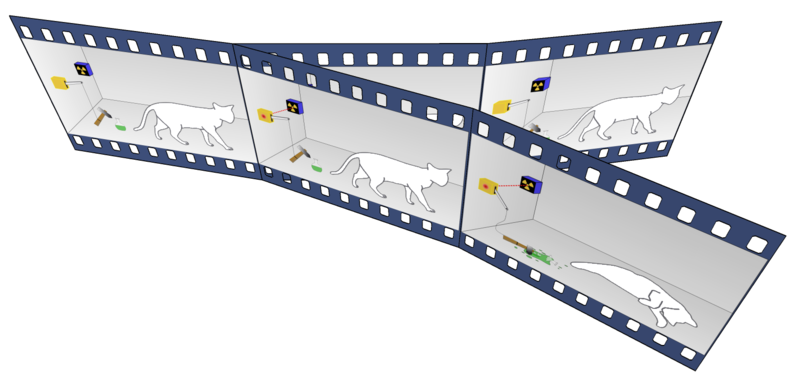

An illustration of the famous Schrödinger’s cat paradox. Source: Wikipedia, shared under Creative Commons license.

In the mid-to-late 20th century, cosmology started to become a proper field of Science, both experimentally and theoretically. Cosmologists built solid theoretical foundations for cosmological models based on measurable quantities called the cosmological parameters, and cast the question of the beginning of the Universe in the form of testable theories. Meanwhile, technical advances in engineering led to increasingly precise measurements of the sky, such as the temperature of the faint radiation that fills the whole sky, the so-called Cosmic Microwave Background, or CMB (first predicted in 1948 by Ralph Alpher and Robert Herman, and later discovered in 1964 by Arno Penzias and Robert Woodrow Wilson). It is now firmly established that the CMB is a remnant of the time when the Universe was very hot and dense; the radiation we observe was released when the Universe had cooled to roughly 3,000 K. The CMB gives us a glimpse of the Universe when it was about 380,000 years old (as opposed to now, when its age is best estimated to be about 13.8 billion years).

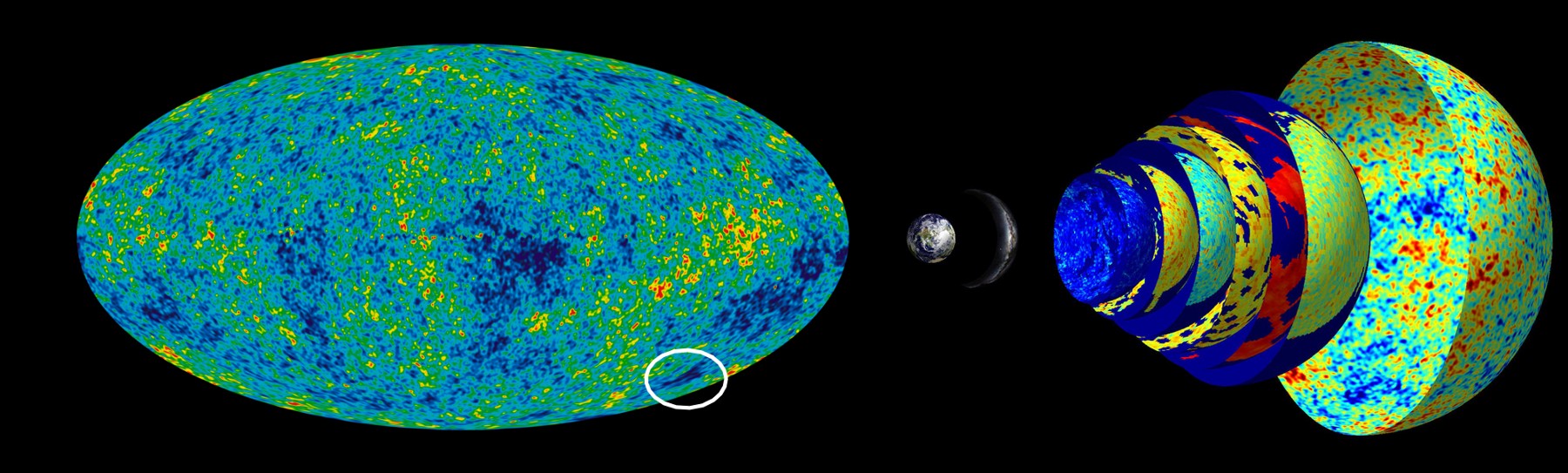

The WMAP image of the CMB on the left shows the cold spot outlined in white. To the right is a view of how the CMB would appear. We measure the distribution of the CMB radiation on an imaginary sphere called the celestial sphere. The image on the left is a 2D map of the celestial sphere in the so-called Mollweide projection, the same technique used to make 2D maps of the Earth. Image Credit: NASA, shared under Creative Commons license

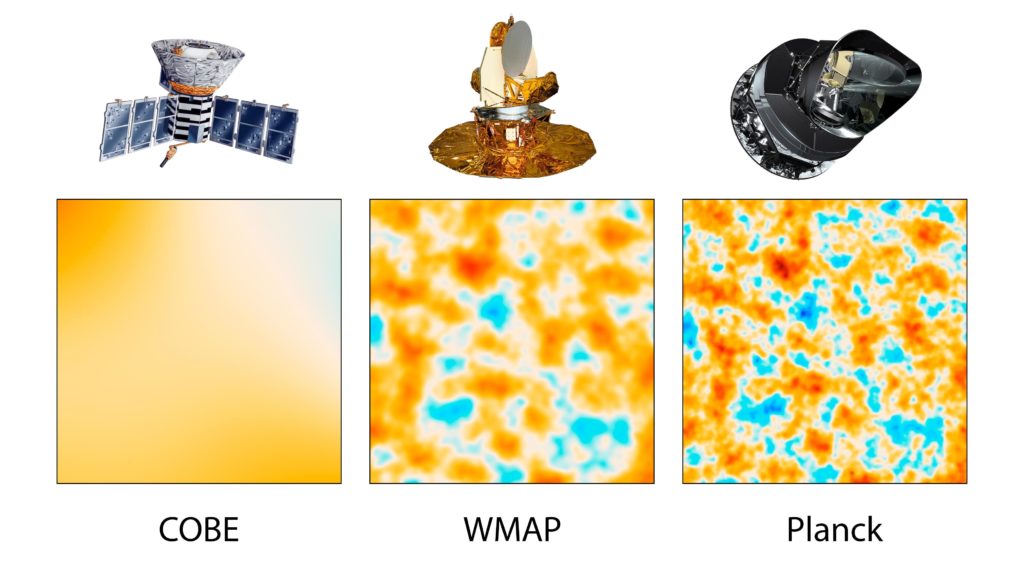

Progressive improvement in the measurement of the temperature distribution on the sky, from the COBE mission to the latest, Planck. Each panel shows a small portion of the sky (shown in Mollweide projection in the previous image), roughly 10 square degrees in size. Image credit: NASA/JPL-Caltech/ESA, Public domain, via Wikimedia Commons under Creative Commons license

The CMB measurement has been cosmologists’ primary probe of the properties of the early Universe. However, as you can imagine, the CMB gives only part of the information about our Universe, namely the part that concerns the early stage of its evolution. The other part comes from the late Universe, i.e., the time in which we exist (along with, perhaps, many other species). At present, we have the CMB radiation (made very weak by the expansion of the Universe), and we also have matter (regular, or some exotic form that we do not yet understand) that has clumped to form galaxies, which in turn have clumped into structures on various distance scales (walls, filaments, clusters, superclusters, etc.). So the question that naturally arises is: how much can be learned from the distribution of temperature and galaxies in the sky? More importantly, what do we aim to learn from these so-called cosmic probes? For one, we would like to test various theoretical models that embody our understanding of the evolution of the Universe. This understanding is based on the laws of physics we have learned over our long history of Physics (from Isaac Newton to Albert Einstein, all the way to Stephen Hawking, and ALL the physicists who have lived in between, since an advance in scientific knowledge is rarely, if ever, the achievement of one person). It is obvious from the start that any study of the sky, whether it involves the distribution of temperature or of galaxies, has to be statistical in nature because of the sheer size of the sky around us (yes, not only above us). This very nature of the study of the Universe poses a frustratingly intriguing problem for us: we have only one sky!!! You might be thinking, “Yeah, so?” I know I did! This fact is implicitly obvious to us, or to anyone ever born anywhere in the Universe. But what exactly is the problem?
Let me try to break it down by replacing it with a “toy” problem that might be simpler to imagine.

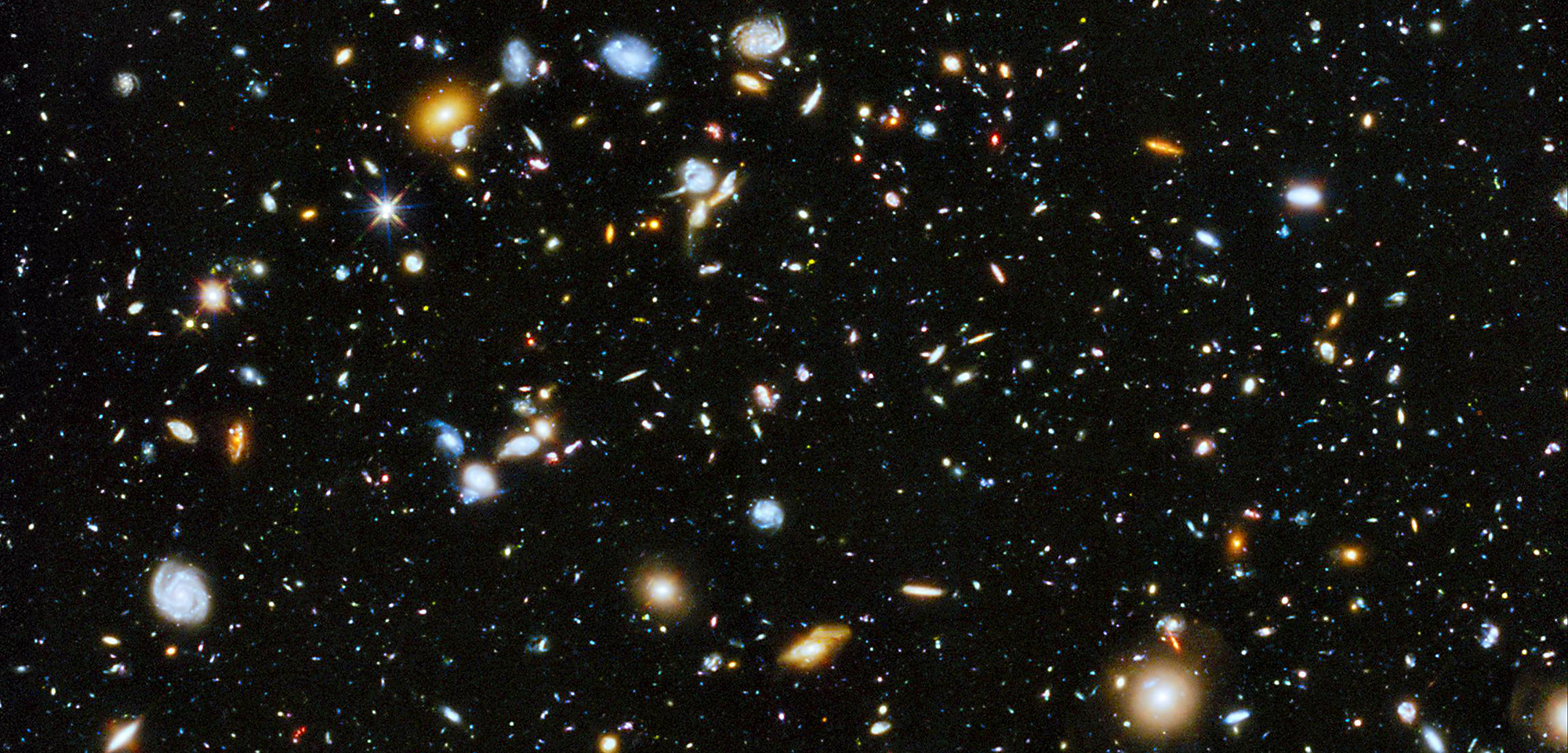

Hubble Ultra Deep Field. Image Credit: NASA/ESA, shared under Creative Commons license

Suppose you want to test the purity of the water sold by a certain company, which we will call “Company A” (replace A with any company you like; I am not being sponsored by anyone!). You can define purity in many different ways, but the definition is not important here. You can take one bottle of water produced by the company, take a small amount of water from it, have its purity tested in a lab, and you will get an answer. Of course, since you took only one sample, there will be a large error on your measured purity (errors are inherent to any measurement made with any instrument; we call them experimental uncertainty). So how do you reduce the error to make a better measurement? One obvious way is to take more samples from the bottle, say 10,000 samples. That should be enough to reduce the experimental uncertainty, no? Certainly. But do you feel that testing only one bottle is a fair way to judge the quality of the water from Company A? After all, as with any other manufactured product, there will be random fluctuations in quality in the final product, because no manufacturing process produces EXACTLY identical copies. Well, then, you can take 10,000 samples from each of 1,000 bottles produced by the company. That is a HUGE amount of data: 10,000 × 1,000 = 10 million measurements!!! That sounds fair for the problem at hand. You might already see the problem with our cosmological measurements: we don’t have 1,000 skies!!! We have only one!! This means that you cannot reduce the uncertainty in cosmological measurements, and hence in the information inferred from them, beyond a certain limit. This fundamental lower limit on the uncertainty is called Cosmic Variance.
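To make the toy problem concrete, here is a minimal Python simulation. It is a sketch under assumed, purely hypothetical numbers: each bottle’s true purity scatters around the company average by `BOTTLE_SPREAD`, and each lab measurement adds independent noise of size `LAB_SPREAD`, both modeled as Gaussian. All names and values are illustrative only. `scatter_of_estimate` repeats the whole experiment many times and reports how much the estimated company average varies from one repetition to the next, while `expected_error` gives the same quantity analytically.

```python
import math
import random
import statistics

# Hypothetical values, chosen only for illustration (arbitrary purity units).
COMPANY_MEAN = 99.0   # true average purity of Company A
BOTTLE_SPREAD = 0.5   # bottle-to-bottle manufacturing scatter
LAB_SPREAD = 2.0      # experimental uncertainty of one lab measurement


def estimate_company_purity(n_bottles, n_samples, rng):
    """Average n_samples lab measurements from each of n_bottles bottles."""
    measurements = []
    for _ in range(n_bottles):
        # The true purity of this particular bottle fluctuates around the company mean.
        bottle_purity = rng.gauss(COMPANY_MEAN, BOTTLE_SPREAD)
        for _ in range(n_samples):
            # Every lab measurement adds its own independent noise.
            measurements.append(rng.gauss(bottle_purity, LAB_SPREAD))
    return statistics.fmean(measurements)


def scatter_of_estimate(n_bottles, n_samples, n_trials=200, seed=0):
    """Standard deviation of the estimate over many repeated experiments."""
    rng = random.Random(seed)
    estimates = [estimate_company_purity(n_bottles, n_samples, rng)
                 for _ in range(n_trials)]
    return statistics.stdev(estimates)


def expected_error(n_bottles, n_samples):
    """Analytic error: the bottle scatter term shrinks only with more bottles."""
    return math.sqrt(BOTTLE_SPREAD**2 / n_bottles
                     + LAB_SPREAD**2 / (n_bottles * n_samples))


if __name__ == "__main__":
    for n_bottles, n_samples in [(1, 1), (1, 100), (1, 2500), (50, 50)]:
        simulated = scatter_of_estimate(n_bottles, n_samples)
        expected = expected_error(n_bottles, n_samples)
        print(f"{n_bottles:>3} bottle(s) x {n_samples:>4} samples: "
              f"simulated {simulated:.3f}, expected {expected:.3f}")
```

With a single bottle, the error levels off near `BOTTLE_SPREAD` however many samples you take; only testing more bottles pushes it lower. With one sky, cosmology is stuck in the single-bottle situation. For a full-sky CMB map, for example, the corresponding floor on the measured angular power spectrum at multipole ℓ is the well-known ΔC_ℓ/C_ℓ = √(2/(2ℓ+1)), because only 2ℓ+1 independent modes exist at each ℓ.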

So it seems, strangely enough, that we have run into a problem similar to the one we had with Quantum Mechanics on the tiniest scales of the Universe, only this time at the far end of the distance spectrum. There seems to be a fundamental limit to how much we can learn about the nature of the Universe, on both the large cosmic scale and the small quantum mechanical scale. Whether this “coincidence” has a common source is hard to say at the moment, at least in full generality. For even the most mildly curious person, it is hard not to imagine its possible implications, which can spark the minds of people in fields ranging from science to philosophy to religion. However, before letting the mind run too far, it is worth remembering that the mathematics that dictates these “limitations” is itself built on an agreed set of principles called axioms. Could a different set of axioms lift one or both of these restrictions? Such a possibility would raise very big questions about everything we have done in mathematics and applied mathematics as an intelligent species. Of course, that is no reason not to think about it. Whatever the answer turns out to be, for now it appears that we, with all our genius, wit, and intellect (and what not), are surrounded by a great cosmic curtain on both the largest and the smallest distance scales of the Universe.

About the Author

The author, Shahbaz Alvi, is a Ph.D. student in Cosmology at the University of Ferrara. His current project focuses on how the distribution of galaxies on large distance scales can be used to infer cosmological parameters, and on how properties of galaxies, such as their redshift, can be inferred from a galaxy survey. He is also an INFN associate and a member of the Euclid Consortium, which is preparing the upcoming Euclid galaxy survey involving ESA and NASA. He has been an active member of KAS since 2015.